Assemblage theory is important primarily because it offers new ways of thinking about social ontology. Instead of treating the social world as consisting of fixed entities and properties, we are invited to think of it as consisting of fluid agglomerations of diverse and heterogeneous processes. Manuel DeLanda's recent book Assemblage Theory sheds new light on some of the complexities of this theory.

Particularly important is the question of how to think about the reality of large historical structures and conditions. What is "capitalism" or "the modern state" or "the corporation"? Are these temporally extended but unified things? Or should they be understood in different terms altogether? Assemblage theory suggests a very different approach. Here is an astute description by DeLanda of historical ontology with respect to the historical imagination of Fernand Braudel:

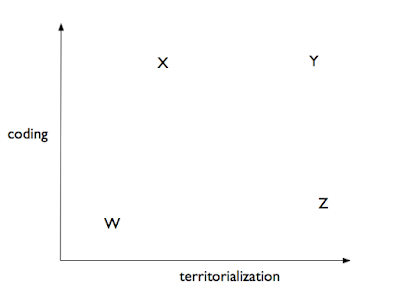

Braudel's is a multi-scaled social reality in which each level of scale has its own relative autonomy and, hence, its own history. Historical narratives cease to be constituted by a single temporal flow -- the short timescale at which personal agency operates or the longer timescales at which social structure changes -- and becomes a multiplicity of flows, each with its own variable rates of change, its own accelerations and decelerations. (14)

DeLanda extends this idea by suggesting that the theory of assemblage is an antidote to essentialism and reification of social concepts:

Thus, both 'the Market' and 'the State' can be eliminated from a realist ontology by a nested set of individual emergent wholes operating at different scales. (16)

I understand this to mean that "Market" is a high-level reification; it does not exist in and of itself. Rather, the things we want to encompass within the rubric of market activity and institutions are an agglomeration of lower-level concrete practices and structures which are contingent in their operation and variable across social space. And this is true of other high-level concepts -- capitalism, IBM, or the modern state.

DeLanda's reconsideration of Foucault's ideas about prisons is illustrative of this approach. After noting that institutions of discipline can be represented as assemblages, he asks the further question: what are the components that make up these assemblages?

The components of these assemblages ... must be specified more clearly. In particular, in addition to the people that are confined -- the prisoners processed by prisons, the students processed by schools, the patients processed by hospitals, the workers processed by factories -- the people that staff those organizations must also be considered part of the assemblage: not just guards, teachers, doctors, nurses, but the entire administrative staff. These other persons are also subject to discipline and surveillance, even if to a lesser degree. (39)

So how do assemblages come into being? And what mechanisms and forces serve to stabilize them over time? This is a topic where DeLanda's approach shares a fair amount with historical institutionalists like Kathleen Thelen (link, link): the insight that institutions and social entities are created and maintained by the individuals who interact with them, and that both parts of this observation need explanation. It is not necessarily the case that the same incentives or circumstances that led to the establishment of an institution also serve to secure the forms of coherent behavior that sustain the institution. So creation and maintenance need to be treated independently. Here is how DeLanda puts this point:

So we need to include in a realist ontology not only the processes that produce the identity of a given social whole when it is born, but also the processes that maintain its identity through time. And we must also include the downward causal influence that wholes, once constituted, can exert on their parts. (18)

Here DeLanda links the compositional causal point (what we might call the microfoundational point) with the additional idea that higher-level social entities exert downward causal influence on lower-level structures and individuals. This is part of his advocacy of emergence; but it is controversial, because it might be maintained that the causal powers of the higher-level structure are simultaneously real and derivative upon the actions and powers of the components of the structure (link). (This is the reason I prefer to use the concept of relative explanatory autonomy rather than emergence; link.)

DeLanda summarizes several fundamental ideas about assemblages in these terms:

- "Assemblages have a fully contingent historical identity, and each of them is therefore an individual entity: an individual person, an individual community, an individual organization, an individual city."

- "Assemblages are always composed of heterogeneous components."

- "Assemblages can become component parts of larger assemblages. Communities can form alliances or coalitions to become a larger assemblage."

- "Assemblages emerge from the interactions between their parts, but once an assemblage is in place it immediately starts acting as a source of limitations and opportunities for its components (downward causality)." (19-21)

Personal identity ... has not only a private aspect but also a public one, the public persona that we present to others when interacting with them in a variety of social encounters. Some of these social encounters, like ordinary conversations, are sufficiently ritualized that they themselves may be treated as assemblages. (27)

Here DeLanda cites the writings of Erving Goffman, who focuses on the public scripts that serve to constitute many kinds of social interaction (link); equally one might refer to Andrew Abbott's processual and relational view of the social world and individual actors (link).

The most compelling example that DeLanda offers here and elsewhere of complex social entities construed as assemblages is perhaps the most complex and heterogeneous product of the modern world -- cities.

Cities possess a variety of material and expressive components. On the material side, we must list for each neighbourhood the different buildings in which the daily activities and rituals of the residents are performed and staged (the pub and the church, the shops, the houses, and the local square) as well as the streets connecting these places. In the nineteenth century new material components were added, water and sewage pipes, conduits for the gas that powered early street lighting, and later on electricity and telephone wires. Some of these components simply add up to a larger whole, but citywide systems of mechanical transportation and communication can form very complex networks with properties of their own, some of which affect the material form of an urban centre and its surroundings. (33)

(William Cronon's social and material history of Chicago in Nature's Metropolis: Chicago and the Great West is a very compelling illustration of this additive, compositional character of the modern city; link. Contingency and conjunctural causation play a very large role in Cronon's analysis. Here is a post that draws out some of the consequences of the lack of systematicity associated with this approach, titled "What parts of the social world admit of explanation?"; link.)